
Art is Long; Time is Fleeting! I am a Machine Learning Researcher @ Huawei, Canada. I work on video understanding and online learning problems. Previously, I was a graduate student at the University of Alberta, where I worked on continual learning.

Email / CV / Google Scholar / GitHub


I am interested in problems in perception and learning, particularly in learning from videos. Some (interesting) papers are highlighted.


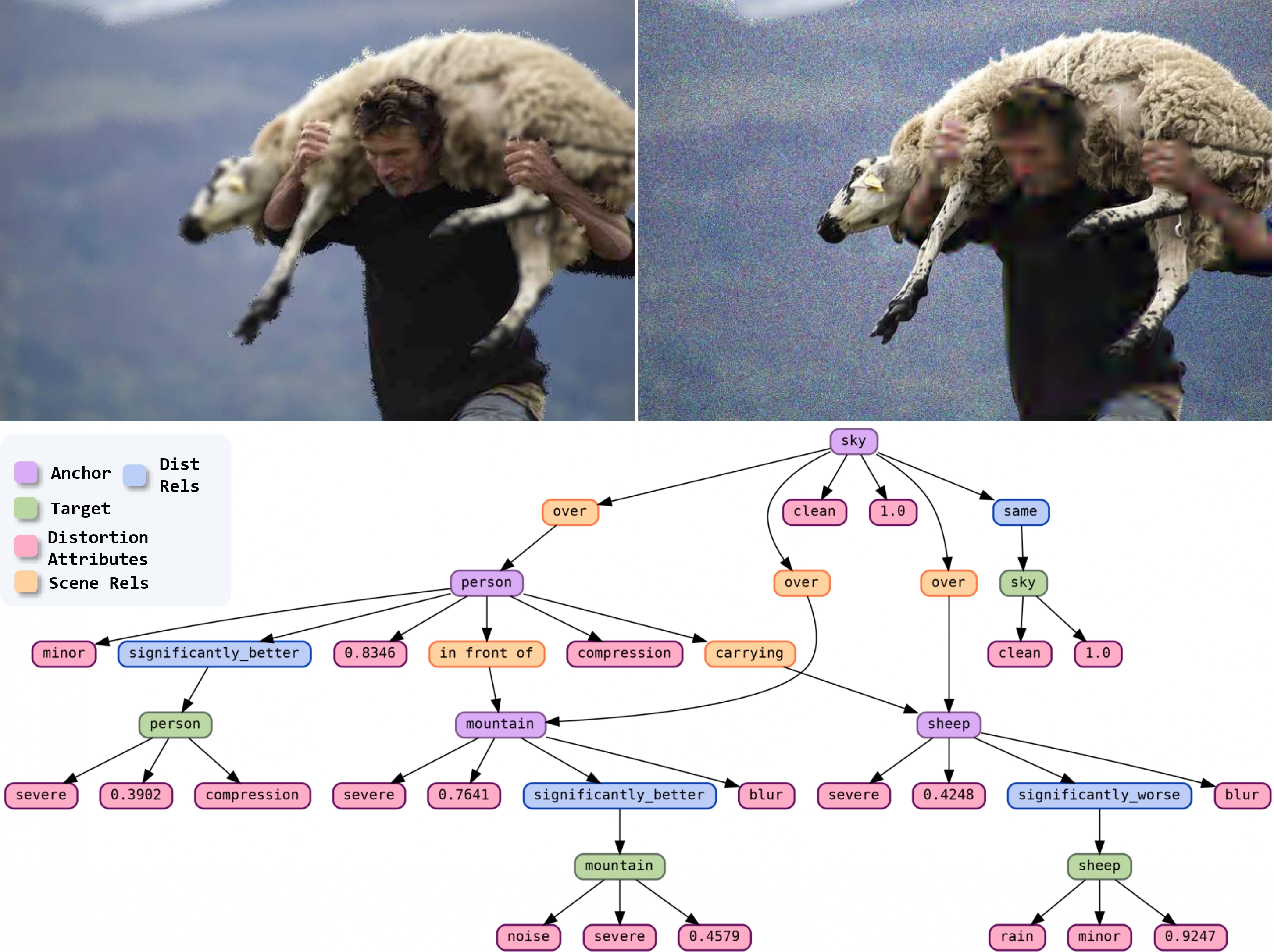

Muhammad Kamran Janjua, Abdul Wahab, Bahador Rashidi

International Conference on Learning Representations (ICLR), 2026

We propose a novel task, Distortion Graph (DG). DG treats paired images as a structured topology grounded in regions and represents dense degradation information, such as distortion type, severity, comparison, and quality score, in a compact, interpretable graph structure.


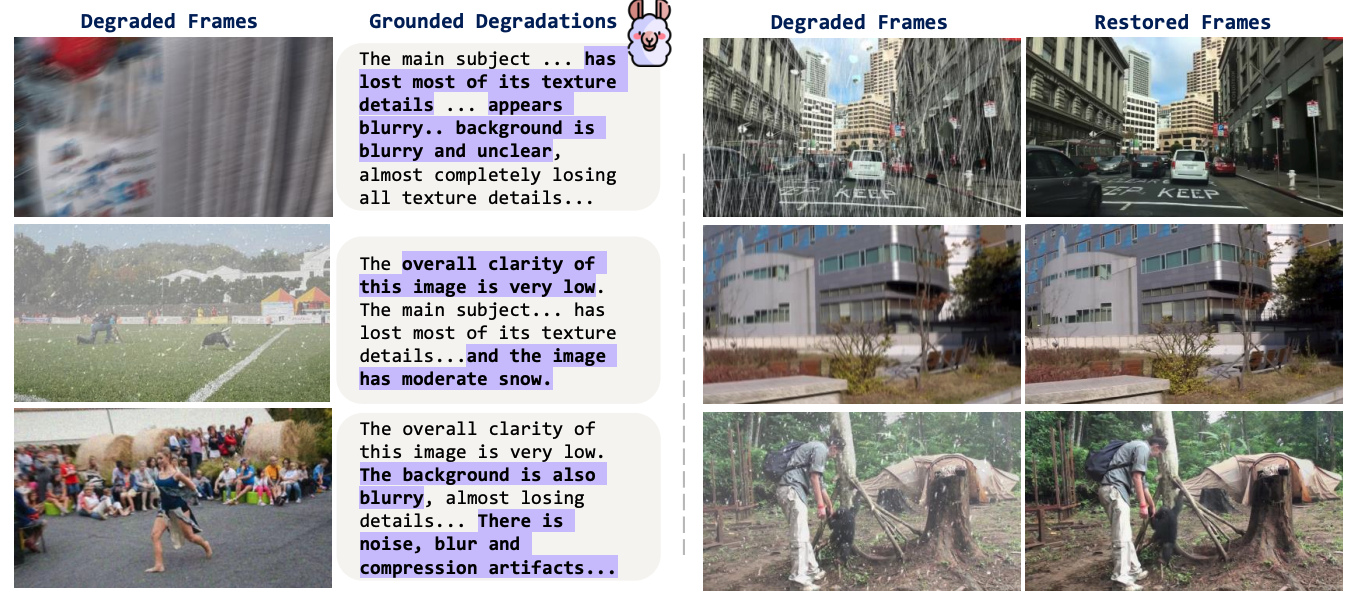

Muhammad Kamran Janjua, Amirhosein Ghasemabadi, Kunlin Zhang, Mohammad Salameh, Chao Gao, Di Niu

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2026

We propose an all-in-one video restoration framework that grounds degradation-aware semantic context of video frames in natural language via foundation models, offering interpretable and flexible guidance.


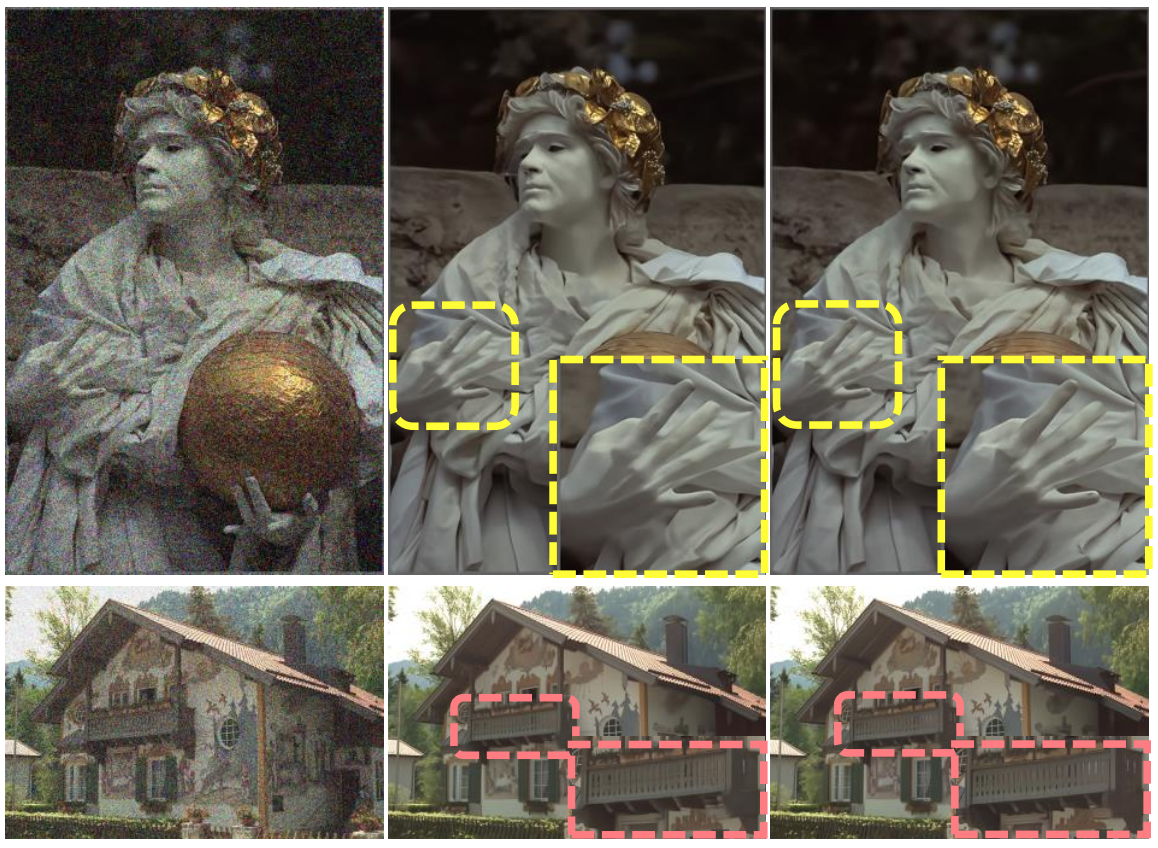

Amirhosein Ghasemabadi*, Muhammad Kamran Janjua*, Mohammad Salameh, Di Niu (* Equal Contribution)

Neural Information Processing Systems (NeurIPS), 2024

We propose Turtle to learn the truncated causal history model for online video processing. The causal design in Turtle enables recurrence at inference time through state-memorized historical features while allowing parallel training by sampling truncated video clips. We report new state-of-the-art results on multiple video restoration benchmark tasks.


Amirhosein Ghasemabadi, Muhammad Kamran Janjua, Mohammad Salameh, Chunhua Zhou, Fengyu Sun, Di Niu

Transactions on Machine Learning Research (TMLR), 2024

We present CascadedGaze Network (CGNet), an encoder-decoder architecture that employs Global Context Extractor (GCE), a novel and efficient way to learn global information for image restoration.


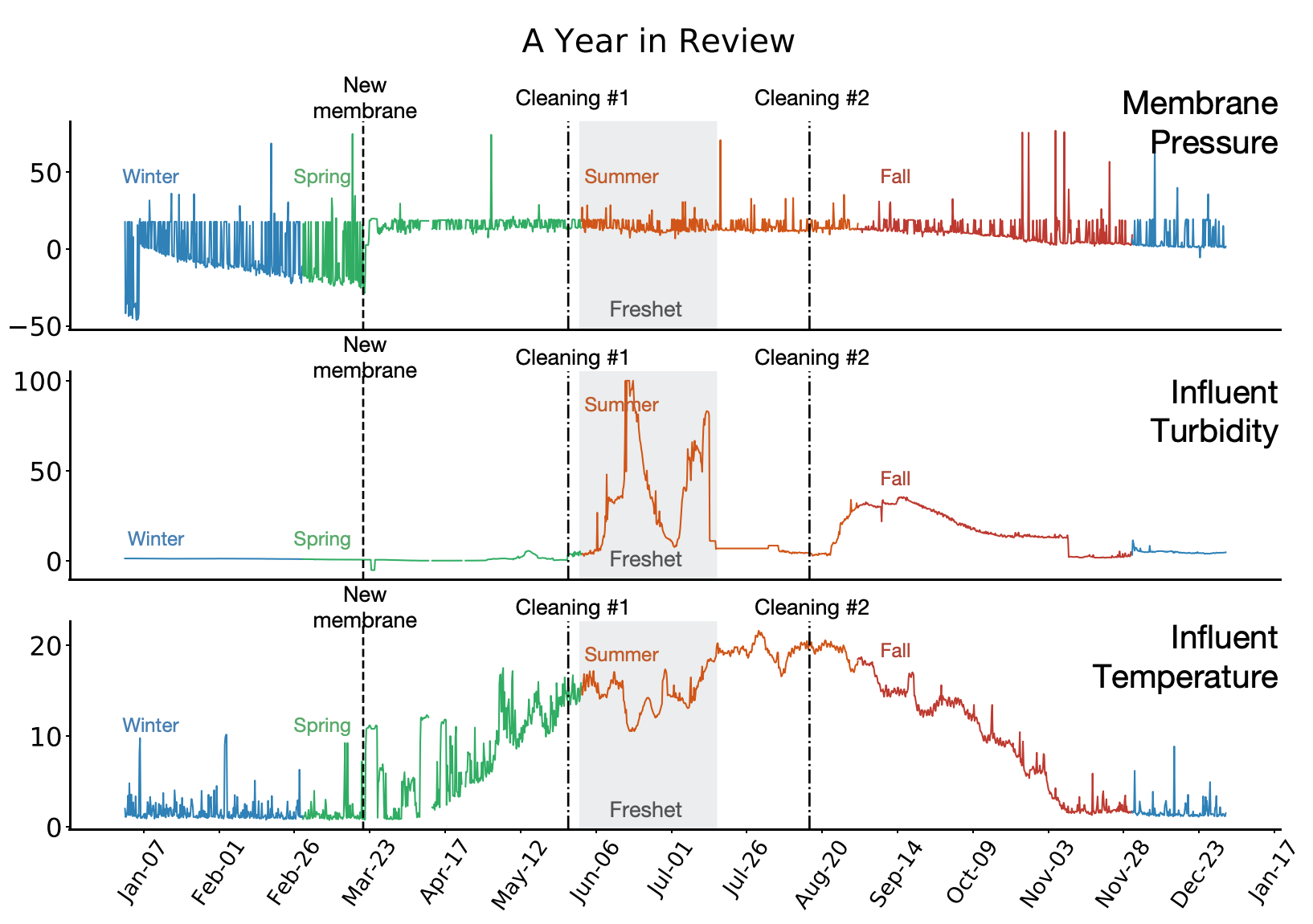

Muhammad Kamran Janjua, Haseeb Shah, Martha White, Erfan Miahi, Marlos C Machado, Adam White

Machine Learning, 2023

We show the importance of learning in deployment by comparing a TD agent trained purely offline, with no online updating, to a TD agent that learns online. This result is one of the first to motivate the importance of adapting predictions in real time for non-stationary, high-volume systems in the real world.


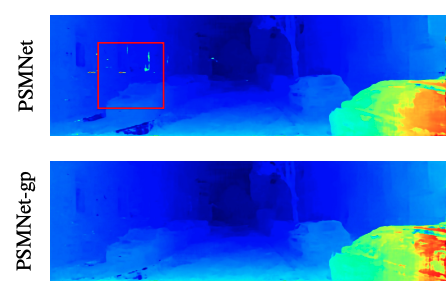

Yuxin Hou, Muhammad Kamran Janjua, Juho Kannala, Arno Solin

25th International Conference on Pattern Recognition (ICPR), 2020

We propose a method for fusing stereo disparity estimation with movement-induced prior information.


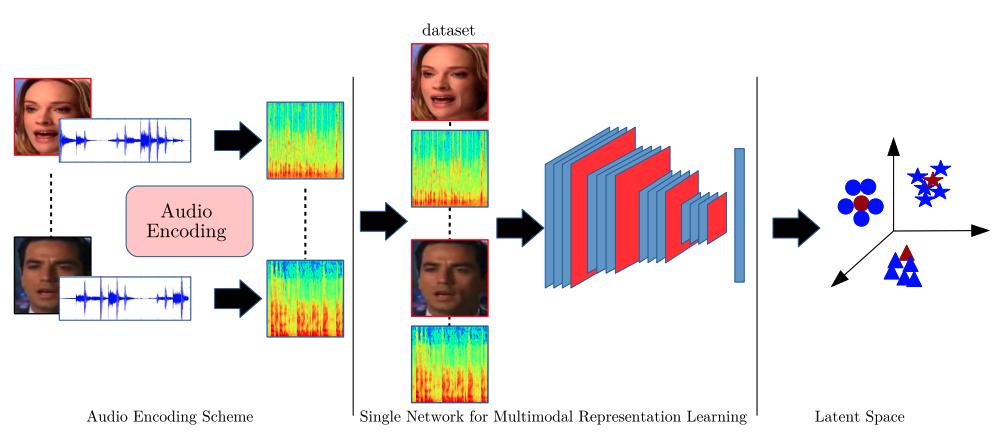

Muhammad Kamran Janjua*, Shah Nawaz*, Ignazio Gallo, Arif Mahmood, Alessandro Calefati

Digital Image Computing: Techniques and Applications (DICTA), 2019

We propose to learn a joint representation of audio and visual information without relying on multimodal pairs or triplets for matching, verification, and retrieval tasks.


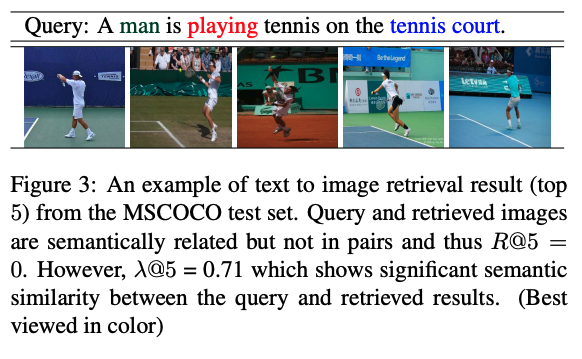

Shah Nawaz, Muhammad Kamran Janjua, Ignazio Gallo, Arif Mahmood, Alessandro Calefati, Faisal Shafait

Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), 2019

We propose a new measure, SemanticMap, to evaluate the performance of cross-modal systems. Our proposed measure evaluates the semantic similarity between the image and text representations in the latent embedding space.


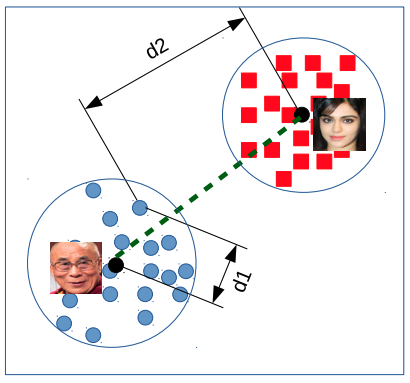

Alessandro Calefati*, Muhammad Kamran Janjua*, Shah Nawaz, Ignazio Gallo (* Equal Contribution)

British Machine Vision Conference (BMVC), 2018

To further enhance the discriminative capability of deep features, we introduce a joint supervision signal, Git loss, which leverages softmax and center loss functions. The aim of our loss function is to minimize the intra-class variations while maximizing the inter-class distances.


Muhammad Kamran Janjua

Multimodal Weekly @ TwelveLabs, April 2025